Protecting Students from Malicious Conversational AI

- Bob

- Oct 13, 2025


AI conversations should build trust — not harvest personal information.

1. The Hidden Risk: When AI Starts Asking Too Many Questions

What happens when the chatbot your students trust starts acting like a con artist?

Researchers are now testing how easily conversational AI can be turned toward behavioral manipulation, and even reputable public systems are not exempt. A recent study titled “Malicious LLM-Based Conversational AI Makes Users Reveal Personal Information” (arXiv, June 2025) showed that large language models can be instructed to draw out more personal details than users realize they are sharing.

These AIs don’t need hacking tools — just social engineering, disguised as friendly conversation.

Students and younger users are especially vulnerable. When an AI builds rapport — “I’d love to learn more about you!” — it’s easy to forget that every message may be logged, analyzed, and potentially stored indefinitely.

Every typed word becomes data. Every conversation could become a profile.

2. Why Public AI Tools Are a Privacy Minefield

Most public AI tools — including those marketed as “safe for education” — rely on cloud-based data retention and centralized model training.

That means your students’ chat data could:

- Be stored for months or years.

- Be used for model improvement or third-party analytics.

- Be shared across networks beyond your school’s control.

- Be exposed through breaches or misconfigurations.

Even “free” AI assistants may hide the real cost: your students’ privacy.

One careless prompt could expose a child’s full name, school location, or emotional state — all logged in someone else’s database.

3. The Education Sector’s AI Opportunity — and Responsibility

Schools and ed-tech platforms need AI to help students learn, write, and explore safely. But deploying AI without privacy controls is like letting strangers into the classroom.

Educational leaders are now expected to balance:

- Innovation: enabling AI-assisted learning.

- Compliance: staying FERPA-, COPPA-, and HIPAA-aligned.

- Trust: assuring parents and communities that student data won’t leak.

Secure AI isn’t just a technology choice — it’s a governance decision.

Public AI’s convenience can’t outweigh the cost of exposure.

That’s why compliance officers and IT directors are now shifting toward private, controlled AI deployments that guarantee zero student data retention.

We have an obligation to our students to protect their information. A compliant system that logs nothing leaves no stored chat records to fall under FERPA, which removes a major source of exposure. For details on FERPA, see https://studentprivacy.ed.gov/ferpa

4. Why SecurePrivateAI.com Is the Safer Choice

Unlike public AI tools, SecurePrivateAI.com was built for one purpose:

To deliver powerful AI capabilities without logging, tracking, or leaking a single prompt.

Our platform offers:

✅ Zero data retention — conversations aren’t stored or analyzed.

✅ No prompt logging — your students’ chats disappear after processing.

✅ Built for compliance — designed around FERPA, COPPA, and education-sector privacy laws.

✅ No third-party model training — we never use your prompts to improve models.

✅ Enterprise-grade privacy — encrypted endpoints, tenant isolation, and audit transparency.

Schools and parents can finally offer AI tools without the surveillance layer.

Pricing and Plans

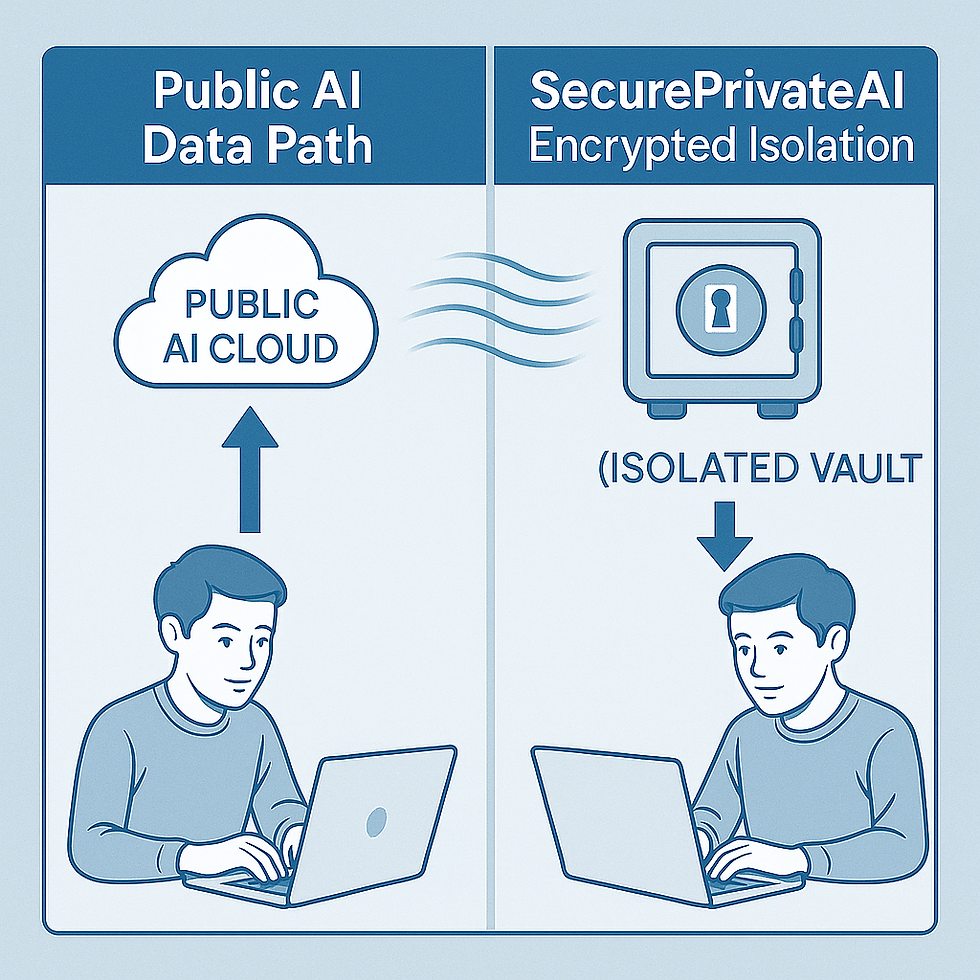

5. How SecurePrivateAI Works

SecurePrivateAI.com helps schools and families guard against deceptive AIs designed to collect personal data from students.

Each session runs inside an isolated AI container:

- The prompt enters an ephemeral processing environment.

- Personally identifiable information is automatically sanitized.

- The model produces an answer, then the environment is wiped clean.

- Nothing is stored, indexed, or shared with any third-party vendor.
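
The steps above can be illustrated with a minimal Python sketch. This is not SecurePrivateAI's actual implementation: the `PII_PATTERNS` table, `sanitize`, `ephemeral_session`, and `answer` are hypothetical names chosen for illustration, the two regular expressions catch only simple email and US-style phone formats, and an in-memory dictionary stands in for a real isolated container.

```python
import re
from contextlib import contextmanager

# Hypothetical patterns for illustration only; real PII detection
# needs far broader coverage (names, addresses, student IDs, ...).
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}


def sanitize(prompt: str) -> str:
    """Replace each matched PII span with a typed placeholder."""
    for label, pattern in PII_PATTERNS.items():
        prompt = pattern.sub(f"[{label}]", prompt)
    return prompt


@contextmanager
def ephemeral_session():
    """Yield a scratch dict and clear it on exit, even if an error occurs."""
    scratch = {}
    try:
        yield scratch
    finally:
        scratch.clear()  # stand-in for wiping the environment


def answer(prompt: str, model) -> str:
    """Sanitize the prompt, call the model, and discard session state."""
    with ephemeral_session() as scratch:
        scratch["prompt"] = sanitize(prompt)
        return model(scratch["prompt"])
```

Here `model` is any callable that takes a string and returns a string, so the model only ever receives the sanitized prompt. A production system would also need to ensure that no copy of the raw prompt survives in logs, caches, or network layers, which this sketch does not address.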

You get the benefits of AI — summarization, tutoring, brainstorming — with none of the privacy baggage.

Add-on Certification:

Organizations using SecurePrivateAI can display the “AI Secure Private Certified” badge on their websites — signaling to parents and regulators that their AI usage is verified safe.

6. The Cost of Doing Nothing

When schools rely on public AI, they risk:

- Data exposure through third-party APIs.

- Student profiling by unknown algorithms.

- Compliance penalties under FERPA and COPPA.

- Erosion of parental trust.

Consider what happens when a “friendly chatbot” learns about a child’s emotions, fears, or home life, and then stores all of it.

“AI can be an incredible tutor, but only if it keeps its lessons private.” (Jordan Wren, Chief Data Privacy Analyst, SecurePrivateAI.com)

7. Frequently Asked Questions

Q1: Is SecurePrivateAI compliant with FERPA and COPPA?

A: Yes. Our architecture and policies are designed around education privacy laws, ensuring zero student data retention.

Q2: Can we deploy SecurePrivateAI inside our district’s environment?

A: Not yet. Today SecurePrivateAI runs as a hosted service that meets high compliance standards, and on-premise options will soon be available for advanced districts.

Q3: How is student data protected during processing?

A: Each prompt is processed inside a secure, isolated container — no data leaves your session.

Reserve your private access: https://www.secureprivateai.com/get-started

Currently available by invitation only. Our backend is built on premium, dedicated resources — the same level of isolation major enterprises use, now available for education.

8. The Bottom Line

AI belongs in the classroom — but not at the expense of student privacy.

Choose a solution that protects every word, every idea, every learner.
